AI Weekly Rundown (May 7 - May 13)

Open-source models quietly excel while enterprises announce major updates

Hello, Engineering Leaders and AI enthusiasts,

Another action-packed week in AI.

Here are the most impactful AI developments from last week.

A quick summary:

OpenAI released Shap·E for text-to-3D generation🏗️

NVIDIA introduced Real-Time Neural Appearance Models🎨

RasaGPT: The first headless LLM chatbot platform released🤖

Multimodal GPT: AI conversational model trained on vision and language announced👁️

Anthropic proposed AI constitution to train AI models on moral values📜

Meta announced AI Fusion ‘ImageBind’: 6 data types in 1 AI model🔗

OpenAI shared a GPT-4-based tool to explain LLM behavior🔍

Google’s AI takeover: Multiple AI products and features announced🌐

Hugging Face announced Transformers Agents: Lets an LLM compose models that solve multimodal tasks🕵️‍♂️

Anthropic introduces a 100K context window, giving Claude a bigger “memory” than ChatGPT🤯

Stability AI releases a powerful text-to-animation tool 🚀

OpenAI released Shap·E for text-to-3D generation

OpenAI has released a new project called Shap·E, a conditional generative model designed to generate 3D assets using implicit functions that can be rendered as textured meshes or neural radiance fields.

Shap·E was trained in two stages on a large dataset of paired 3D assets and textual descriptions. First, an encoder learns to map 3D assets into the parameters of an implicit function. Second, a conditional diffusion model is trained on the encoder's outputs to learn the distribution of those implicit-function parameters given the conditioning input (such as a text prompt). The resulting model can generate complex, diverse 3D assets in a matter of seconds.
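Shap·E's key idea is that a 3D asset is represented by the parameters of an implicit function, a function you can query at any 3D point. The sketch below is a deliberately tiny stand-in, assuming a sphere is the only possible shape: a hypothetical `encode_sphere` "encoder" maps a point cloud to two parameters (center and radius), and `sphere_sdf` is the implicit function those parameters define. It is not Shap·E's code; the real encoder outputs the weights of a neural network, and the diffusion stage that learns to generate those parameters from text is omitted here.

```python
import numpy as np


def encode_sphere(points: np.ndarray) -> tuple[np.ndarray, float]:
    """Toy 'encoder': map a point cloud sampled on a sphere to implicit-function parameters."""
    center = points.mean(axis=0)                                    # estimated sphere center
    radius = float(np.linalg.norm(points - center, axis=1).mean())  # mean distance to the center
    return center, radius


def sphere_sdf(query: np.ndarray, center: np.ndarray, radius: float) -> np.ndarray:
    """Implicit function: signed distance, negative inside, zero on the surface, positive outside."""
    return np.linalg.norm(query - center, axis=-1) - radius


# A toy "3D asset": six axis-aligned points on a sphere of radius 2 centered at (1, 0, 0).
true_center = np.array([1.0, 0.0, 0.0])
asset = true_center + 2.0 * np.vstack([np.eye(3), -np.eye(3)])

center, radius = encode_sphere(asset)
queries = np.array([[1.0, 0.0, 0.0], [4.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
print(sphere_sdf(queries, center, radius))  # inside, outside, on the surface
```

Once the parameters are known, the shape can be queried at any resolution; a mesh could be extracted where the function crosses zero, for example with marching cubes.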

NVIDIA introduced Real-Time Neural Appearance Models

NVIDIA Research published a paper describing a system that renders, in real time, scenes with complex material appearance that was previously reserved for offline rendering. This is achieved through a combination of algorithmic and system-level innovations.

The appearance model uses learned hierarchical textures that are interpreted by neural decoders, which produce reflectance values and importance-sampled directions. The decoders incorporate two graphics priors that support accurate reconstruction of mesoscale effects and efficient importance sampling, enabling anisotropic sampling and level-of-detail rendering.
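To make "learned hierarchical textures interpreted through neural decoders" more concrete, here is a minimal sketch assuming a pyramid of latent textures (one latent vector per texel, with coarser levels used for level of detail) and a tiny MLP decoder that turns a fetched latent plus light and view directions into RGB reflectance. The weights are random rather than trained, all sizes and names (`LATENT_DIM`, `decode_reflectance`, and so on) are hypothetical, and the paper's graphics priors and importance sampling are not modeled.

```python
import numpy as np

rng = np.random.default_rng(0)
LATENT_DIM = 8  # hypothetical number of latent channels per texel


def build_latent_pyramid(base_res: int) -> list[np.ndarray]:
    """Level 0 is full resolution; each coarser level averages 2x2 texels (base_res must be a power of 2)."""
    levels = [rng.normal(size=(base_res, base_res, LATENT_DIM))]
    while levels[-1].shape[0] > 1:
        prev = levels[-1]
        half = prev.shape[0] // 2
        levels.append(prev.reshape(half, 2, half, 2, LATENT_DIM).mean(axis=(1, 3)))
    return levels


def fetch_latent(levels: list[np.ndarray], uv: tuple[float, float], lod: int) -> np.ndarray:
    """Nearest-texel lookup at the requested level of detail (higher lod = coarser)."""
    tex = levels[min(lod, len(levels) - 1)]
    res = tex.shape[0]
    row = min(int(uv[1] * res), res - 1)
    col = min(int(uv[0] * res), res - 1)
    return tex[row, col]


# Tiny decoder MLP: [latent, light dir, view dir] -> RGB reflectance. Untrained random weights.
W1 = rng.normal(size=(LATENT_DIM + 6, 32)) * 0.1
W2 = rng.normal(size=(32, 3)) * 0.1


def decode_reflectance(latent: np.ndarray, wi: np.ndarray, wo: np.ndarray) -> np.ndarray:
    hidden = np.maximum(np.concatenate([latent, wi, wo]) @ W1, 0.0)  # ReLU
    return np.log1p(np.exp(hidden @ W2))  # softplus keeps reflectance non-negative


pyramid = build_latent_pyramid(16)  # levels: 16x16, 8x8, 4x4, 2x2, 1x1
light = np.array([0.0, 0.0, 1.0])
view = np.array([0.0, 0.6, 0.8])
rgb_near = decode_reflectance(fetch_latent(pyramid, (0.3, 0.7), lod=0), light, view)
rgb_far = decode_reflectance(fetch_latent(pyramid, (0.3, 0.7), lod=3), light, view)
```

The same decoder serves every level of the pyramid, which is what lets a coarser texture level stand in for distant surfaces without a separate material model.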

RasaGPT: The first headless LLM chatbot platform released

RasaGPT is billed as the first headless LLM chatbot platform built on top of Rasa and Langchain. It provides boilerplate and a reference implementation of Rasa and Telegram that uses an LLM library for indexing, retrieval, and context injection.

It is designed to tackle:

➡ Creating your own proprietary bot endpoint using FastAPI, with document upload and a “training” pipeline included

➡ Integrating Langchain/LlamaIndex with Rasa

➡ Resolving library conflicts with LLM libraries and passing metadata

➡ Dockerized support on macOS for running Rasa

➡ Reverse proxying chatbots via ngrok

➡ Implementing PGVector with your own custom schema instead of using Langchain’s highly opinionated PGVector class

➡ Adding multi-tenancy (Rasa doesn't natively support this), sessions, and metadata between Rasa and your own backend/application
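Indexing, retrieval, and context injection are the core of RasaGPT's LLM side. The sketch below shows the general pattern under loud simplifications: a bag-of-words `embed` function stands in for a real embedding model, and an in-memory list stands in for a PGVector table. None of these function names come from RasaGPT; they are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Rank documents by similarity to the query (a vector store such as PGVector would do this in SQL)."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Context injection: prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "our refund policy lasts 30 days",
    "the office closes on sundays",
    "shipping takes 5 business days",
]
print(build_prompt("what is the refund policy", docs))
```

In a multi-tenant setup like the one RasaGPT describes, the retrieval step would typically also filter by tenant or session metadata before ranking.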

Multimodal GPT: AI conversational model trained on vision and language announced

MultiModal-GPT is a vision and language model designed to hold multi-round dialogues with humans. It can follow instructions such as generating detailed captions, counting objects of interest, and answering general questions.

It is fine-tuned from OpenFlamingo in a parameter-efficient way: Low-rank Adapters (LoRA) are added to the language model's self-attention and cross-attention layers, and training uses a unified instruction template for both language and visual instruction data.

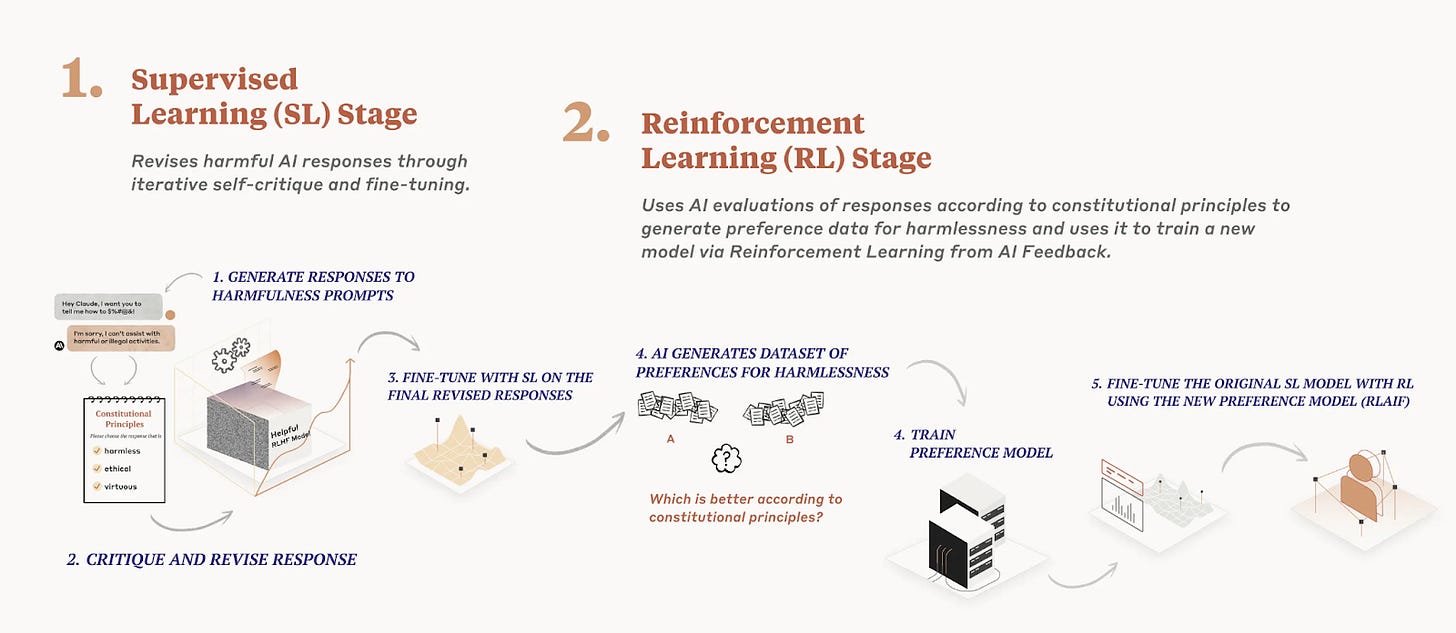
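LoRA is what makes the fine-tuning parameter-efficient: the pretrained weight matrix stays frozen and only a low-rank update is trained. A minimal NumPy sketch of a single LoRA-adapted linear layer, assuming illustrative sizes, rank `r=4`, and scaling `alpha=8` (these are not MultiModal-GPT's actual settings):

```python
import numpy as np

rng = np.random.default_rng(0)


class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A, B trained."""

    def __init__(self, d_in: int, d_out: int, r: int = 4, alpha: float = 8.0):
        self.W = rng.normal(size=(d_out, d_in))     # pretrained weight, kept frozen
        self.A = rng.normal(size=(r, d_in)) * 0.01  # trainable down-projection
        self.B = np.zeros((d_out, r))               # trainable up-projection, zero-initialized
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        return self.W @ x + self.scale * (self.B @ (self.A @ x))

    def trainable_params(self) -> int:
        return self.A.size + self.B.size


layer = LoRALinear(512, 512)
x = rng.normal(size=512)
print(layer.trainable_params(), "trainable vs", layer.W.size, "frozen")  # 4096 trainable vs 262144 frozen
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the pretrained one, so fine-tuning starts from the base model's behavior while training well under 2% of this layer's parameters.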

Anthropic proposed AI constitution to train AI models on moral values

The Alphabet-backed startup disclosed the full set of principles, which it calls Claude's constitution, that it used to train Claude and make it safer. Unlike ChatGPT's training, which relies heavily on human feedback that may be biased, Anthropic gives Claude a written set of values to learn from when deciding how to respond to questions.

The value guidelines draw from various sources, including the United Nations’ Universal Declaration of Human Rights, Apple’s Terms of Service, and more.
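In Anthropic's published Constitutional AI method, principles are applied in a critique-and-revise loop: the model drafts a response, critiques it against a principle, and rewrites it; the revised responses are then used for fine-tuning, and a later reinforcement-learning phase uses AI feedback based on the same principles. The sketch below shows only the loop, assuming a generic `model(prompt) -> str` callable. The prompt wording and the principle text are illustrative and not quoted from Claude's constitution.

```python
from typing import Callable

# Illustrative principle written for this sketch, not quoted from Claude's constitution.
PRINCIPLES = [
    "Identify ways the response is harmful, unethical, or misleading.",
]


def critique_and_revise(prompt: str, model: Callable[[str], str], principles: list[str]) -> str:
    """Draft a response, then critique and rewrite it once per principle."""
    response = model(prompt)
    for principle in principles:
        critique = model(
            f"Prompt: {prompt}\nResponse: {response}\n"
            f"Critique the response using this principle: {principle}"
        )
        response = model(
            f"Prompt: {prompt}\nResponse: {response}\nCritique: {critique}\n"
            "Rewrite the response to address the critique."
        )
    return response  # in the full method, revised responses become fine-tuning data


# Stub model that echoes the last line of its prompt; it stands in for a real LLM.
def echo_model(prompt: str) -> str:
    return prompt.splitlines()[-1]


final = critique_and_revise("Summarize today's AI news.", echo_model, PRINCIPLES)
```

With the echo stub, `final` is simply the last revision instruction, which makes the control flow easy to trace; a real model would return an improved answer instead.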

Meta announced AI Fusion ‘ImageBind’: 6 data types in 1 AI model

Meta has announced ImageBind, a new open-source AI model that links together six types of data: images and video, text, audio, depth, thermal (temperature), and movement (IMU) readings. Meta says ImageBind is the first AI model to combine six data types in a single embedding space.

The company also notes that other streams of sensory input could be added to future models, including touch, speech, smell, and brain fMRI signals.
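ImageBind builds its single embedding space by pairing each modality with images and training with a contrastive (InfoNCE) objective, so matching pairs land close together. A minimal NumPy sketch of a symmetric InfoNCE loss, assuming the encoders have already produced one embedding per row and that row i of each batch is a matching pair; the temperature of 0.07 is a common default, not necessarily ImageBind's setting.

```python
import numpy as np


def l2_normalize(x: np.ndarray) -> np.ndarray:
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def log_softmax(logits: np.ndarray) -> np.ndarray:
    shifted = logits - logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    return shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))


def infonce(image_emb: np.ndarray, other_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: row i of each batch is a positive pair; every other row is a negative."""
    q = l2_normalize(image_emb)
    k = l2_normalize(other_emb)
    logits = q @ k.T / temperature
    idx = np.arange(len(q))
    image_to_other = -log_softmax(logits)[idx, idx].mean()
    other_to_image = -log_softmax(logits.T)[idx, idx].mean()
    return float((image_to_other + other_to_image) / 2)


# Toy batch of 4 pairs: identical embeddings (aligned) vs. the same embeddings with partners shuffled.
aligned = infonce(np.eye(4), np.eye(4))
shuffled = infonce(np.eye(4), np.eye(4)[[1, 2, 3, 0]])
print(f"aligned loss {aligned:.4f}, shuffled loss {shuffled:.4f}")
```

According to Meta, aligning each modality to images this way also brings modalities that were never paired directly (for example audio and text) into alignment through the shared image space, which is the "binding" in the name.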

OpenAI shared a GPT-4-based tool to explain LLM behavior

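OpenAI is developing a tool that uses GPT-4 to explain the behavior of individual neurons in LLMs, with the aim of anticipating problems with AI systems. GPT-4 first writes a natural-language explanation of what a neuron responds to; it then simulates how a neuron matching that explanation would activate on text, and the explanation is scored by how closely the simulated activations match the real ones.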

The code is available on GitHub but is still in the early stages of development. The researchers generated explanations for all 307,200 neurons in GPT-2.
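The scoring step boils down to comparing two activation sequences. A minimal sketch in the spirit of OpenAI's correlation scoring, assuming per-token activations for one neuron are already available; the tokens, activations, and the "Marvel references" neuron below are made up for illustration.

```python
import numpy as np


def explanation_score(real, simulated) -> float:
    """Pearson correlation between real activations and activations simulated from an explanation.

    Close to 1.0 means the explanation predicts the neuron well (up to scale); near 0 means it explains little.
    """
    real = np.asarray(real, dtype=float)
    simulated = np.asarray(simulated, dtype=float)
    return float(np.corrcoef(real, simulated)[0, 1])


# Made-up per-token activations for a hypothetical "fires on Marvel references" neuron.
tokens = ["The", "Avengers", "met", "Thor", "at", "noon"]
real_acts = [0.0, 8.5, 0.2, 7.9, 0.0, 0.1]
good_sim = [0, 9, 0, 8, 0, 0]  # simulation from an accurate explanation
bad_sim = [5, 0, 5, 0, 5, 5]   # simulation from a misleading explanation
print(round(explanation_score(real_acts, good_sim), 3), round(explanation_score(real_acts, bad_sim), 3))
```

Correlation ignores the absolute scale of activations, so an explanation only needs to predict *when* a neuron fires and how strongly relative to other tokens.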

Google’s AI takeover: Multiple AI products and features announced

Google announced a wave of AI products and features at its I/O 2023 conference:

Unveiled PaLM 2, a new LLM with stronger multilingual (100+ languages), reasoning, and coding capabilities.

Previewed Gemini, a next-generation multimodal foundation model, still in training, that is positioned to rival GPT-4.

Opened global beta access for Bard in 180+ countries.

Introduced Studio Bot, an AI coding assistant for Android developers.

Announced generative AI features in Search, including a conversational mode and added context for images.

Announced a flurry of AI features for Google Workspace, including Sheets, Docs, and Gmail.

Introduced Duet AI for Google Cloud, a collaborative AI assistant that helps with code, search, and workflows.

Announced ML Hub to help developers train and deploy AI models.

Announced a collaboration with Adobe to bring Firefly AI art generation to Bard.

Hugging Face announced Transformers Agents: Lets an LLM compose models that solve multimodal tasks

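Hugging Face has launched Transformers Agents, an experimental API in its Transformers library. An LLM acts as the agent: it reads a natural-language request, picks from a set of tools backed by Hugging Face models, and generates code that calls them, so a single request can work across text, images, video, audio, and documents. Hugging Face pitches it as a way to control more than 100,000 HF models and to lower the barrier to entry for machine learning.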
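The pattern behind Transformers Agents is an LLM that reads tool descriptions, decides which tools a request needs, and chains them. The sketch below imitates that flow with no model at all: `toy_planner` is a keyword matcher standing in for the LLM (the real agent generates Python code instead), and the two tools are trivial string functions standing in for Hugging Face models. All names here are hypothetical and are not the `transformers` API.

```python
from typing import Callable

# Hypothetical tools: name -> (description shown to the planner, function).
TOOLS: dict[str, tuple[str, Callable[[str], str]]] = {
    "translator": ("Translates text into English.", lambda text: f"[EN] {text}"),
    "summarizer": ("Keeps only the first sentence of a text.", lambda text: text.split(".")[0] + "."),
}
TRIGGERS = {"translate": "translator", "summar": "summarizer"}


def tool_prompt() -> str:
    """The tool list a real agent would paste into the LLM's prompt."""
    return "\n".join(f"- {name}: {desc}" for name, (desc, _) in TOOLS.items())


def toy_planner(request: str) -> list[str]:
    """Stand-in for the LLM: pick tools whose trigger word appears, in the order mentioned."""
    text = request.lower()
    hits = sorted((text.find(word), tool) for word, tool in TRIGGERS.items() if word in text)
    return [tool for _, tool in hits]


def run_agent(request: str, data: str) -> str:
    """Run the planned tools in sequence, feeding each output into the next tool."""
    for name in toy_planner(request):
        _, fn = TOOLS[name]
        data = fn(data)
    return data


print(run_agent("Translate this, then summarize it", "Bonjour. Comment allez-vous?"))
```

Swapping the keyword matcher for an LLM is what makes the real system flexible: the model can handle requests it was never explicitly programmed for, as long as the tool descriptions are clear.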

Anthropic introduces 100k context windows, giving Claude bigger “memory” than ChatGPT

Anthropic has expanded Claude’s context window from 9K to 100K tokens, corresponding to around 75,000 words! This means you can now submit hundreds of pages of material for Claude to digest and analyze, and conversations with Claude can go on for hours or even days.

The context window size is an important parameter in language models like GPT. Models with small context windows tend to “forget” earlier parts of a conversation, even their initial instructions, after a few thousand words or so. Claude’s larger “memory” could be game-changing for working with long documents and extended conversations.
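The 100K-tokens-to-75,000-words figure implies roughly 0.75 words per token for English text. A quick back-of-the-envelope check, assuming that ratio (it varies by language and content) and a hypothetical reserve of tokens for the model's reply:

```python
WORDS_PER_TOKEN = 0.75  # implied by 100K tokens ~ 75,000 words; varies with the text


def estimated_tokens(word_count: int) -> int:
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, context_tokens: int = 100_000, reserve_for_reply: int = 0) -> bool:
    """True if a document of `word_count` words should fit, leaving room for the reply."""
    return estimated_tokens(word_count) + reserve_for_reply <= context_tokens


print(estimated_tokens(75_000))                                                # 100000
print(fits_in_context(75_000), fits_in_context(75_000, context_tokens=9_000))  # True False
print(fits_in_context(75_000, reserve_for_reply=1_000))                        # False
```

By the same ratio, the old 9K window held only about 6,750 words, which is why long documents previously had to be chunked or summarized first.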

Stability AI releases a powerful text-to-animation tool

Stability AI released the Stable Animation SDK, a tool that lets developers and artists use its most advanced Stable Diffusion models to generate animations. It supports all of Stability's models, including Stable Diffusion 2.0 and Stable Diffusion XL, and offers three ways to create animations:

Text to animation

Initial image input + text input

Input video + text input

That's all for now!

If you are new to ‘The AI Edge’ newsletter, subscribe to receive the ‘Ultimate AI tools and ChatGPT Prompt guide’, designed specifically for Engineering Leaders and AI enthusiasts.

Thanks for reading!

![video-to-gif output image](https://substackcdn.com/image/fetch/$s_!5VdE!,w_1456,c_limit,f_auto,q_auto:good,fl_lossy/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F7c1d1eb5-af47-46af-8dc8-b56261abcf1c_600x338.gif)